Kubernetes on Hetzner with ingress and certificate

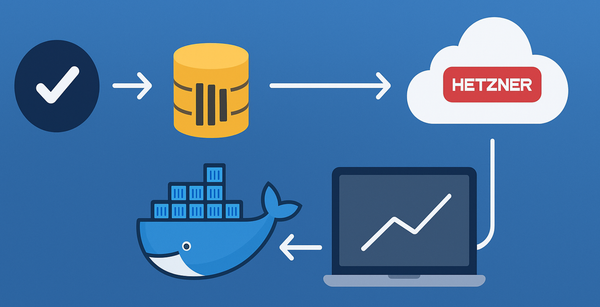

This guide shows how I set up a Kubernetes cluster on Hetzner Cloud using kops, configured NGINX ingress with Hetzner Load Balancer, and enabled TLS certificates via cert-manager.

I host a few websites on Hetzner. A managed Kubernetes cluster from a service provider would probably cost me more than setting one up myself.

Creating the Cluster

I first tried to create the cluster with Terraform, because I wanted to automate the creation of both the VMs and the Kubernetes cluster. I gave that up after having a go at user data and cloud-init scripts: I hit hurdles even getting Docker installed. I ended up using kops instead, which turned out to be really simple.

Since kops requires an S3-compatible state store, and Hetzner’s object storage wasn’t fully compatible, I used DigitalOcean Spaces instead.

I used mise to load the environment variables into my shell automatically whenever I cd into the infrastructure repository.

    # .env
    HCLOUD_TOKEN="xxx"
    S3_ENDPOINT="https://lon1.digitaloceanspaces.com"
    S3_ACCESS_KEY_ID="xxx"
    S3_SECRET_ACCESS_KEY="xxx"
    KOPS_STATE_STORE="do://kops-state-store"
    S3_REGION="us-east"
    S3_FORCE_PATH_STYLE=true

    # mise.toml
    [env]
    _.file = ".env"

Then I created the cluster:

    kops create cluster \
      --name=example.k8s.local \
      --ssh-public-key=~/.ssh/id_ed25519.pub \
      --cloud=hetzner \
      --zones=hel1 \
      --image=ubuntu-24.04 \
      --networking=calico \
      --network-cidr=10.10.0.0/16 \
      --node-size=cax21 \
      --control-plane-size=cax11

Hetzner documents the locations that can be used for --zones, and the VM sizes are listed on the create VM page.
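Because kops reads the Hetzner token and the state-store settings from the environment, it can be worth checking that mise really loaded them before running a kops command that changes anything. The following is a minimal sketch in POSIX shell. The function name missing_kops_env is made up for this example, and the list only covers the token, endpoint, credentials, and state store from the .env file above.

```shell
# Report every variable from the list that is unset or empty.
# Returns 0 when all are present, 1 otherwise.
missing_kops_env() {
  missing=0
  for name in HCLOUD_TOKEN S3_ENDPOINT S3_ACCESS_KEY_ID \
              S3_SECRET_ACCESS_KEY KOPS_STATE_STORE; do
    # Look up the variable whose name is stored in $name.
    # eval is safe here because the names are fixed literals.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running missing_kops_env inside the repository prints nothing when everything is loaded, and otherwise names each missing variable on stderr.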

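kops create cluster only writes the cluster configuration to the state store. Applying it with --yes is what creates the actual resources:

    kops update cluster --name example.k8s.local --yes --admin

Installing NGINX Ingress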

To expose our services to the internet, we need an ingress controller. We'll use NGINX ingress and a Hetzner load balancer.

    helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
    helm repo update
    helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx \
      --namespace "ingress-nginx" \
      --create-namespace \
      -f ./apps/ingress-nginx/values.yaml

    # apps/ingress-nginx/values.yaml
    controller:
      service:
        type: LoadBalancer
        annotations:
          load-balancer.hetzner.cloud/name: ingress-example.k8s.local
          load-balancer.hetzner.cloud/location: hel1
          load-balancer.hetzner.cloud/type: lb11
          load-balancer.hetzner.cloud/ipv6-disabled: "true"
          load-balancer.hetzner.cloud/use-private-ip: "true"

Setting controller.service.type to LoadBalancer provisions a Hetzner load balancer that points at the NGINX ingress controller service. use-private-ip makes the load balancer, which should be attached to the cluster's private network, talk to the worker nodes over that private network instead of their public IPs. The two boolean annotations are quoted because Kubernetes annotation values must be strings.
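Once the chart is installed, the load balancer's public address is what DNS will have to point at later. A small sketch for reading it from the cluster follows. The function name ingress_lb_ip is made up for this example. It assumes the release name ingress-nginx used above, for which the chart names the Service ingress-nginx-controller, and it assumes the first address in the Service status is the public IPv4.

```shell
# Print the first address reported in the ingress controller Service status.
# Prints nothing while the load balancer is still being provisioned.
ingress_lb_ip() {
  kubectl get service ingress-nginx-controller \
    --namespace ingress-nginx \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

Calling ingress_lb_ip right after the install may return an empty string for a short while, until Hetzner has finished creating the load balancer.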

Setting Up Cert-Manager

    helm upgrade --install \
      cert-manager oci://quay.io/jetstack/charts/cert-manager \
      --version v1.18.2 \
      --namespace cert-manager \
      --create-namespace \
      --set crds.enabled=true

At this point only cert-manager itself is installed. We also need to create ClusterIssuers. I found it best to start with the ClusterIssuer for the Let's Encrypt staging environment, which has far more generous rate limits than production.

    kubectl apply \
      -f cluster/cert-manager/clusterissuer-lets-encrypt-staging.yaml

    # cluster/cert-manager/clusterissuer-lets-encrypt-staging.yaml
    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-staging
    spec:
      acme:
        server: https://acme-staging-v02.api.letsencrypt.org/directory
        email: john.doe@example.com # <- provide your actual email
        profile: tlsserver
        privateKeySecretRef:
          name: letsencrypt-staging
        solvers:
          - http01:
              ingress:
                class: nginx

And for the Let's Encrypt production ClusterIssuer:

    kubectl apply -f cluster/cert-manager/clusterissuer-lets-encrypt.yaml

    # cluster/cert-manager/clusterissuer-lets-encrypt.yaml
    apiVersion: cert-manager.io/v1
    kind: ClusterIssuer
    metadata:
      name: letsencrypt-prod
    spec:
      acme:
        server: https://acme-v02.api.letsencrypt.org/directory
        email: john.doe@example.com # <- provide your actual email
        profile: tlsserver
        privateKeySecretRef:
          name: letsencrypt-prod
        solvers:
          - http01:
              ingress:
                class: nginx

You now have ingress-nginx and cert-manager installed on a cluster created via kops that uses Hetzner as the cloud provider.
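Before pointing an Ingress at an issuer, it may help to confirm that the issuer has registered its ACME account and reports Ready. The sketch below wraps kubectl wait for that. The function name wait_for_issuers and the 60-second timeout are choices made for this example, not something cert-manager prescribes.

```shell
# Wait until each named ClusterIssuer reports the Ready condition.
# Stops at the first issuer that does not become Ready within the timeout.
wait_for_issuers() {
  for issuer in "$@"; do
    kubectl wait --for=condition=Ready "clusterissuer/$issuer" --timeout=60s || return 1
  done
}
```

For the two issuers above, the call would be wait_for_issuers letsencrypt-staging letsencrypt-prod.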

Deploying an Example App

    # apps/example/templates/ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: example
      annotations:
        kubernetes.io/ingress.class: nginx
        cert-manager.io/cluster-issuer: letsencrypt-staging # or letsencrypt-prod
    spec:
      rules:
        - host: example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: example
                    port:
                      number: 2368
      tls:
        - hosts:
            - example.com
          secretName: example-tls # <- cert-manager stores the issued certificate in this secret

The cert-manager.io/cluster-issuer annotation triggers cert-manager to create a certificate for the host.

The hosts listed under tls are added to the certificate’s Subject Alternative Names (SANs). cert-manager stores the issued certificate in the secret named by spec.tls[].secretName.

Configure your DNS provider to point the hostname at the load balancer before applying the Helm chart. The HTTP-01 challenge can only succeed once Let's Encrypt can reach the host over HTTP.
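A quick way to check the DNS record before deploying is to compare what the name resolves to with the load balancer's address. This is a minimal sketch that assumes dig is installed. The function name dns_points_to is made up for this example, and the IP in the usage note is a documentation placeholder.

```shell
# Succeed only if one of the A records for hostname $1 is exactly the IP in $2.
dns_points_to() {
  dig +short A "$1" | grep -Fxq "$2"
}
```

For example, dns_points_to example.com 203.0.113.10 && echo "DNS is ready" prints the message only when the record already points at that address. Freshly changed records can take a while to propagate, so a failure right after editing DNS is not necessarily a misconfiguration.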

    helm upgrade --install ghost ./apps/example \
      --namespace examplens --create-namespace

With this setup, you now have a Kubernetes cluster on Hetzner, fronted by an NGINX ingress controller, secured with automatic TLS certificates from Let’s Encrypt. Next, you could extend this by enabling high availability for production workloads, setting up monitoring (e.g., Prometheus + Grafana), or automating cluster provisioning with Terraform.