Set Up Automated ClickHouse Backups for Self-Hosted Plausible Analytics

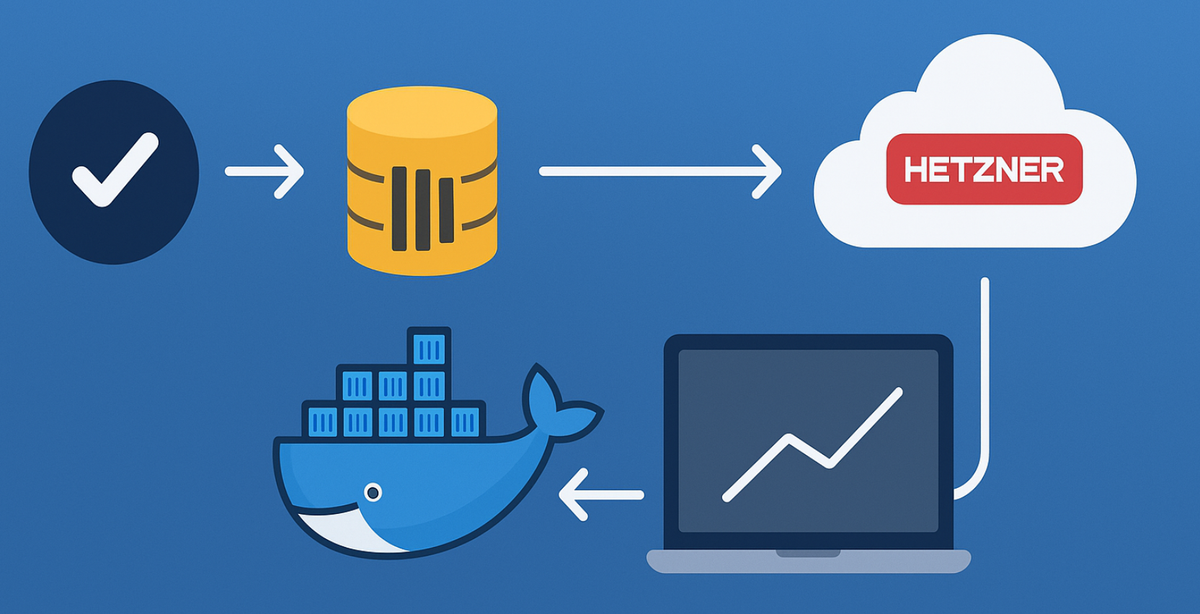

Self-hosting Plausible Analytics? Your ClickHouse events database needs reliable backups. Learn how to automate daily backups to Hetzner object storage using clickhouse-backup and Docker, with automatic retention policies to keep storage costs low.

Introduction

I've been using the self-hosted Community Edition of Plausible Analytics to monitor traffic to my websites for the past six months. It's a free, open-source, GDPR-compliant, and cookie-free alternative to Google Analytics. Plausible also offers a managed hosting option that you may want to try out.

Analytics data is surprisingly valuable - it informs product decisions, marketing strategies, and business planning. While it might seem less critical than user data or transactions, losing years of traffic patterns and insights can be genuinely painful. Regular automated backups are cheap insurance against a variety of failure scenarios.

For me, the primary reason for automating the backup is to move the database to another shared cluster that I've set up in Kubernetes.

This blog post walks you through automating backups of the ClickHouse database using clickhouse-backup, a tool from the folks at Altinity. We'll run the tool inside a Docker container to minimise the setup required. By the end, you will have a scheduled job that runs daily and backs up the database to Hetzner Object Storage or any other S3-compatible storage.

Note that recent ClickHouse releases also ship native BACKUP and RESTORE SQL commands that can write directly to S3-compatible storage, for example through a remote disk defined in the server configuration.

Prerequisites

To follow along with the post:

- I'll assume that you've already got Plausible Analytics self-hosted or are setting it up.

- To use Hetzner Object Storage, you'll of course need an account. Any S3 API-compatible storage backend, such as AWS S3, DigitalOcean Spaces, or MinIO, should also work.

- Finally, you'll need access to SSH into the VM or server that's currently set up to run Plausible and contains the ClickHouse database. I followed the Docker Compose instructions to self-host Plausible Analytics. This machine, therefore, has both Docker and Docker Compose installed.

All commands in this post are executed on the VM or server that runs the ClickHouse database in a Docker container.

Configuration setup

The first step is to work out what the clickhouse-backup config.yml file should look like.

You can run the following command to see the default configuration values for clickhouse-backup and get an overview of what configuration parameters are available to change.

```bash
docker run -it --rm altinity/clickhouse-backup print-config
```

I also found reading through the documentation in the clickhouse-backup repository a good way to get an overview. The repository also includes some example scenarios for you to try out.

Create a config.yml file in the /opt/clickhouse-backup folder:

```bash
mkdir -p /opt/clickhouse-backup && \
  touch /opt/clickhouse-backup/config.yml && \
  cd /opt/clickhouse-backup
```

Modify the file using nano or vim so that it has the following content:

```yaml
# /opt/clickhouse-backup/config.yml
general:
  remote_storage: s3
  backups_to_keep_local: 7
  backups_to_keep_remote: 90

clickhouse:
  username: default
  password: ""
  host: plausible-ce-plausible_events_db-1
  port: 9000

s3:
  access_key: YOUR_ACCESS_KEY
  secret_key: YOUR_SECRET_KEY
  bucket: YOUR_S3_BUCKET_NAME
  endpoint: https://xxx.your-objectstorage.com
  path: plausible-clickhouse-backups
  force_path_style: true
```

Let's go through the file one section at a time. I'd recommend reading the documentation in the clickhouse-backup GitHub repository for a full description of every available value.

General

- `remote_storage`: This is going to be `s3` in our case.
- `backups_to_keep_local`: The default value is `0`, which keeps local backups forever. I went with `7` since I'll be setting up a scheduled job that backs up the database once a day, and I want to keep a week's worth of local copies.
- `backups_to_keep_remote`: The default value is `0`, which keeps backups in remote storage forever. I went with `90` since I want backups to remain in the S3 bucket for about 3 months. Pick a value that suits your needs.

ClickHouse

- `username` and `password`: I hadn't changed the default ClickHouse username and password after going through the Plausible installation. If you have, use those credentials instead.
- `host`: The name of the container running the ClickHouse database. For me, this was `plausible-ce-plausible_events_db-1`. The container running clickhouse-backup will be able to resolve this hostname because we'll tell Docker to run it on the same network as the database.
- `port`: `9000` is ClickHouse's default native (TCP) protocol port, which clickhouse-backup connects to. It is not the HTTP port; the HTTP interface listens on `8123` by default.

You can confirm the name of the container running the ClickHouse database by executing:

```bash
docker ps --format "table {{ .Image }}\t{{.Names}}"
```

```
ghcr.io/plausible/community-ed   plausible-ce-plausible-1
clickhouse/clickhouse-server:2   plausible-ce-plausible_events_db-1
postgres:16-alpine               plausible-ce-plausible_db-1
```

s3

- `access_key` and `secret_key`: Generate new credentials (or reuse existing ones) in the Hetzner console, or the equivalent in your storage provider of choice, and add them here.
- `bucket`: The name of your S3 bucket.
- `endpoint`: For me, this was `https://hel1.your-objectstorage.com`, where `hel1` is the Helsinki location I chose for the storage.
- `path`: The location inside the S3 bucket where the tool uploads backups.
- `force_path_style`: Needs to be set to `true`, as it forces the S3 client to place the bucket name in the URL path instead of the hostname, making it compatible with Hetzner's S3 API.
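Before running anything, it's worth making sure none of the placeholder values from the example config are left behind. The helper below is a hypothetical pre-flight check (`check_config_placeholders` is not part of clickhouse-backup); it simply greps for the placeholder strings used in this post and assumes the config lives at /opt/clickhouse-backup/config.yml unless you pass another path.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: fails if config.yml still contains the
# placeholder values from this post (YOUR_ACCESS_KEY, YOUR_SECRET_KEY,
# YOUR_S3_BUCKET_NAME, or the "xxx" endpoint host).
check_config_placeholders() {
  local config=${1:-/opt/clickhouse-backup/config.yml}
  if [[ ! -r "$config" ]]; then
    echo "cannot read $config" >&2
    return 2
  fi
  # grep prints any offending lines (with line numbers) so you can fix them.
  if grep -nE 'YOUR_(ACCESS_KEY|SECRET_KEY|S3_BUCKET_NAME)|https://xxx\.' "$config"; then
    echo "config.yml still contains placeholder values" >&2
    return 1
  fi
  return 0
}

# Usage:
#   check_config_placeholders /opt/clickhouse-backup/config.yml && echo "config looks filled in"
```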

Note that the default config skips the `system.*`, `INFORMATION_SCHEMA.*`, `information_schema.*`, and `_temporary_and_external_tables.*` tables.
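To see which tables those patterns would exclude, here is a rough approximation using bash glob matching. It assumes that bash's `[[ == ]]` patterns behave like clickhouse-backup's own matching for these simple `database.*` cases, which is an approximation rather than the tool's actual code; the array and function names are hypothetical. In the default configuration printed by `print-config`, this list should appear under the `skip_tables` key of the `clickhouse` section.

```bash
#!/usr/bin/env bash
# Approximation of the default skip list (not clickhouse-backup's own code).
DEFAULT_SKIP_PATTERNS=(
  'system.*'
  'INFORMATION_SCHEMA.*'
  'information_schema.*'
  '_temporary_and_external_tables.*'
)

# Returns 0 if a "database.table" name matches one of the patterns above.
is_skipped_table() {
  local table=$1 pattern
  for pattern in "${DEFAULT_SKIP_PATTERNS[@]}"; do
    # An unquoted right-hand side of == inside [[ ]] is treated as a glob.
    if [[ "$table" == $pattern ]]; then
      return 0
    fi
  done
  return 1
}
```

For example, `is_skipped_table system.query_log` succeeds, while a table in Plausible's events database (such as `plausible_events_db.events_v2`) matches none of the default patterns, so it is included in the backup.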

clickhouse-backup run command

To construct the run command, there are a couple of values we need to get by inspecting the Docker environment.

Network

Take note of the name of the network Docker uses for running the Plausible services.

```bash
docker network list
```

```
NETWORK ID     NAME                   DRIVER    SCOPE
8a8ffb8fe710   bridge                 bridge    local
ecc836139aef   host                   host      local
f87c584b2276   none                   null      local
47ead8c71f8c   plausible-ce_default   bridge    local
```

In this case, it's `plausible-ce_default`.
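Docker Compose names a project's default network `<project>_default`, which is why the Plausible network ends in `_default`. If you'd rather filter the list than read it, a small sketch like the one below works on the output of `docker network ls --format '{{.Name}}'`; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads network names (one per line) on stdin and prints
# those that follow Docker Compose's "<project>_default" naming.
find_compose_default_networks() {
  local name
  while read -r name; do
    if [[ "$name" == *_default ]]; then
      printf '%s\n' "$name"
    fi
  done
}

# Usage:
#   docker network ls --format '{{.Name}}' | find_compose_default_networks
```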

Understanding the volumes

Get the list of Docker volumes and find the name of the volume that Plausible is using for ClickHouse server data.

```bash
docker volume list
```

```
DRIVER    VOLUME NAME
local     plausible-ce_db-data
local     plausible-ce_event-data
local     plausible-ce_event-logs
local     plausible-ce_plausible-data
```

- `plausible-ce_db-data` → very likely Postgres data (for Plausible's metadata).
- `plausible-ce_event-data` → This should be the ClickHouse data (events storage).
- `plausible-ce_event-logs` → ClickHouse logs (log tables/system logs).
- `plausible-ce_plausible-data` → The Plausible app itself (uploads, configs, maybe ephemeral stuff).

👉 The critical one for clickhouse-backup is plausible-ce_event-data, since that holds /var/lib/clickhouse.
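If you'd like to confirm this without reading through JSON, you can ask Docker for just the name and destination of each mount and pick out the one mounted at /var/lib/clickhouse. This is a sketch: `find_clickhouse_data_volume` is a hypothetical helper, and the container name is the one found earlier in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads "<volume-name> <destination>" lines on stdin and
# prints the named volume mounted at /var/lib/clickhouse. Bind mounts have an
# empty name, so they never match.
find_clickhouse_data_volume() {
  local name destination
  while read -r name destination; do
    if [[ "$destination" == /var/lib/clickhouse ]]; then
      printf '%s\n' "$name"
    fi
  done
}

# Usage (Go template fields come from `docker inspect` mount entries):
#   docker inspect --format '{{range .Mounts}}{{.Name}} {{.Destination}}{{println}}{{end}}' \
#     plausible-ce-plausible_events_db-1 | find_clickhouse_data_volume
```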

You can double-check which volume the container mounts by running:

```bash
docker inspect plausible-ce-plausible_events_db-1 | grep -A3 Mounts
```

You should see something like:

```
"Mounts": [
    {
        "Type": "volume",
        "Source": "plausible-ce_event-logs",
--
"Mounts": [
    {
        "Type": "bind",
        "Source": "/opt/plausible-ce/clickhouse/logs.xml",
```

Manual backup test

Now that we've got the clickhouse-backup configuration file and the necessary values for the docker run command, we can create a backup manually.

```bash
docker run --rm \
  --volume "$(pwd)/config.yml":/etc/clickhouse-backup/config.yml \
  --volume plausible-ce_event-data:/var/lib/clickhouse \
  --network plausible-ce_default \
  altinity/clickhouse-backup:latest create plausible-test-backup
```

We are mounting two things: the first is the config.yml file we created earlier on the host (the `$(pwd)` assumes you're still in /opt/clickhouse-backup), and the second is the Docker volume attached to the ClickHouse server that holds the database.

List the backup to verify that a local backup was created by the previous command.

```bash
docker run --rm \
  --volume "$(pwd)/config.yml":/etc/clickhouse-backup/config.yml \
  --volume plausible-ce_event-data:/var/lib/clickhouse \
  --network plausible-ce_default \
  altinity/clickhouse-backup:latest list
```

You should see something like:

```
plausible-test-backup 2025-09-30 11:41:47 local all:3.15MiB,data:3.13MiB,arch:0B,obj:0B,meta:19.33KiB,rbac:0B,conf:0B,nc:0B regular
```

Now that the tool has created a backup, we can run the second command, which uploads the backup to the S3 bucket at the specified path.

```bash
docker run --rm \
  --volume "$(pwd)/config.yml":/etc/clickhouse-backup/config.yml \
  --volume plausible-ce_event-data:/var/lib/clickhouse \
  --network plausible-ce_default \
  altinity/clickhouse-backup:latest upload plausible-test-backup
```

Verify that the backup appears in your chosen Hetzner bucket.
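The three commands above share the same flags, so you may find it handy to wrap them in a small shell function. This is a sketch, not part of clickhouse-backup: `chb` and `chb_cmd` are hypothetical names, and the defaults assume the config path, volume, and network used in this post. It uses an absolute path for config.yml so it doesn't depend on the current directory.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the docker run command used above.
# Defaults assume the names from this post; export the variables to override.
CHB_CONFIG=${CHB_CONFIG:-/opt/clickhouse-backup/config.yml}
CHB_VOLUME=${CHB_VOLUME:-plausible-ce_event-data}
CHB_NETWORK=${CHB_NETWORK:-plausible-ce_default}
CHB_IMAGE=${CHB_IMAGE:-altinity/clickhouse-backup:latest}

# Builds the full command in the CHB_CMD array without running it,
# which makes it easy to print or inspect first.
chb_cmd() {
  CHB_CMD=(docker run --rm
    --volume "$CHB_CONFIG:/etc/clickhouse-backup/config.yml"
    --volume "$CHB_VOLUME:/var/lib/clickhouse"
    --network "$CHB_NETWORK"
    "$CHB_IMAGE" "$@")
}

# Runs clickhouse-backup with the given subcommand, e.g. "create NAME".
chb() {
  chb_cmd "$@"
  "${CHB_CMD[@]}"
}

# Usage:
#   chb create plausible-test-backup
#   chb upload plausible-test-backup
#   chb list remote
```

The last usage line lists the backups stored in the bucket, which is a quick way to check an upload from the terminal.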

Automated scheduling

Let's modify one of the example backup shell scripts from the clickhouse-backup repository to use the commands we've put together so far.

Create a new file with the name daily_backup.sh at /opt/clickhouse-backup/daily_backup.sh.

```bash
#!/bin/bash
# /opt/clickhouse-backup/daily_backup.sh

# Use absolute paths: cron does not run this script from /opt/clickhouse-backup,
# so $(pwd)/config.yml would point at the wrong file.
CONFIG=/opt/clickhouse-backup/config.yml
LOG=/var/log/clickhouse-backup.log
BACKUP_NAME=plausible_analytics_ce-daily-$(date -u +%Y-%m-%dT%H-%M-%S)

# Step 1: create a local backup.
docker run --rm \
  --volume "$CONFIG":/etc/clickhouse-backup/config.yml \
  --volume plausible-ce_event-data:/var/lib/clickhouse \
  --network plausible-ce_default \
  altinity/clickhouse-backup:latest create "$BACKUP_NAME" \
  >> "$LOG" 2>&1

exit_code=$?
if [[ $exit_code -ne 0 ]]; then
  echo "clickhouse-backup create $BACKUP_NAME FAILED and return $exit_code exit code"
  exit $exit_code
fi

# Step 2: upload it to the S3 bucket configured in config.yml.
docker run --rm \
  --volume "$CONFIG":/etc/clickhouse-backup/config.yml \
  --volume plausible-ce_event-data:/var/lib/clickhouse \
  --network plausible-ce_default \
  altinity/clickhouse-backup:latest upload "$BACKUP_NAME" \
  >> "$LOG" 2>&1

exit_code=$?
if [[ $exit_code -ne 0 ]]; then
  echo "clickhouse-backup upload $BACKUP_NAME FAILED and return $exit_code exit code"
  exit $exit_code
fi
```

Make sure the script is executable.

```bash
chmod +x /opt/clickhouse-backup/daily_backup.sh
```

Now it’s just a matter of wiring it into cron. Edit the root crontab (since backups usually need elevated privileges for Docker):

```bash
sudo crontab -e
```

At the bottom, add the cron entry:

```
0 2 * * * /opt/clickhouse-backup/daily_backup.sh
```

This means:

- `0` → minute (0)
- `2` → hour (2 AM)
- `* * *` → every day of the month, every month, every day of the week

So it will run daily at 02:00 server time, which is 02:00 UTC only if the server's time zone is set to UTC.
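With one backup per day, the retention values in config.yml translate directly into how much history you keep. Here is a back-of-the-envelope sketch; the function names are hypothetical, and the storage estimate assumes every retained backup is a full copy of roughly the same size, which holds for the plain `create` and `upload` commands used here (incremental uploads need an option such as `--diff-from`).

```bash
#!/usr/bin/env bash
# Hypothetical helpers for rough retention maths.

# retention_days KEEP INTERVAL_HOURS -> days of history covered by KEEP backups.
retention_days() {
  awk -v keep="$1" -v hours="$2" 'BEGIN { printf "%.1f\n", keep * hours / 24 }'
}

# estimate_remote_mib KEEP SIZE_MIB -> rough upper bound on bucket usage in MiB,
# assuming each retained backup is a full copy of about SIZE_MIB.
estimate_remote_mib() {
  awk -v keep="$1" -v size="$2" 'BEGIN { printf "%.1f\n", keep * size }'
}
```

With the values from this post, `retention_days 90 24` prints `90.0` and `retention_days 7 24` prints `7.0`. `estimate_remote_mib 90 3` prints `270.0`, a rough ceiling in MiB for 90 backups of about 3 MiB each; compression settings and data growth will move the real figure.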

Redirect logs (optional)

You’re already writing Docker output into /var/log/clickhouse-backup.log.

Cron itself emails any output from the job (if MAILTO is set and a mail agent is installed), but you can also redirect the script's stdout and stderr to a file explicitly:

```
0 2 * * * /opt/clickhouse-backup/daily_backup.sh >> /var/log/cron-clickhouse-backup.log 2>&1
```

This way, you also capture script-level errors (e.g., “FAILED and return exit code”).
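Since the script prints a line containing "FAILED" whenever a step fails, a simple check over the end of that log can tell you whether a recent run had problems. This is a hypothetical helper (`check_backup_log` is not part of any tool), and looking at only the last 50 lines by default is an arbitrary choice so that an old, already-fixed failure doesn't keep alerting.

```bash
#!/usr/bin/env bash
# Hypothetical helper: returns 1 if the last N lines of the cron log contain a
# "FAILED" line written by daily_backup.sh, 2 if the log can't be read, else 0.
check_backup_log() {
  local log=${1:-/var/log/cron-clickhouse-backup.log}
  local lines=${2:-50}
  if [[ ! -r "$log" ]]; then
    echo "cannot read $log" >&2
    return 2
  fi
  # Print any matching lines so you can see which step failed.
  if tail -n "$lines" "$log" | grep 'FAILED'; then
    return 1
  fi
  return 0
}

# Usage:
#   check_backup_log /var/log/cron-clickhouse-backup.log || echo "last backup run failed"
```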

Verify cron is active

List jobs with:

```bash
sudo crontab -l
```

Check logs in /var/log/syslog (or /var/log/cron.log depending on the distro) to see if it triggered:

```bash
grep CRON /var/log/syslog
```
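Cron firing doesn't guarantee that the upload worked, so it can also help to check the bucket for a backup from today. The sketch below reads the output of clickhouse-backup's `list remote` command and looks for a name with the date prefix used by daily_backup.sh. `has_backup_for_date` is a hypothetical helper, and it assumes each line of the remote listing starts with the backup name, as the local `list` output shown earlier does.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads `clickhouse-backup list remote` output on stdin and
# succeeds if a backup named plausible_analytics_ce-daily-<DAY>T... exists.
# DAY is a UTC date in YYYY-MM-DD form, matching the script's naming scheme.
has_backup_for_date() {
  local day=$1 name rest
  while read -r name rest; do
    # "$day" is quoted so it matches literally; T* matches the time part.
    if [[ "$name" == plausible_analytics_ce-daily-"$day"T* ]]; then
      return 0
    fi
  done
  return 1
}

# Usage (assumes the paths and names from this post):
#   docker run --rm \
#     --volume /opt/clickhouse-backup/config.yml:/etc/clickhouse-backup/config.yml \
#     --volume plausible-ce_event-data:/var/lib/clickhouse \
#     --network plausible-ce_default \
#     altinity/clickhouse-backup:latest list remote | has_backup_for_date "$(date -u +%F)"
```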

✅ That’s it! Now your backup will run automatically at 2 AM every day.